Today’s paper is Facebook’s Tectonic Filesystem: Efficiency from Exascale, from FAST ’21. The paper covers the Tectonic Filesystem at Facebook, its implementation, and various design decisions they made. I’ll summarize the paper and do a deeper dive on some of its highlights.

While there is a rich history of filesystem papers and systems, it’s nice to read this Tectonic paper, since many of the oft-quoted papers are getting a bit long in the tooth. The Google File System (2003) paper is almost two decades old but is cited more than Bill Murray in the wild.1 There are some more modern papers, mostly from Microsoft like Windows Azure Storage (2011) and Azure Data Lake Store (2017), but it’s nice to see something from a different company.

Tectonic’s Architecture

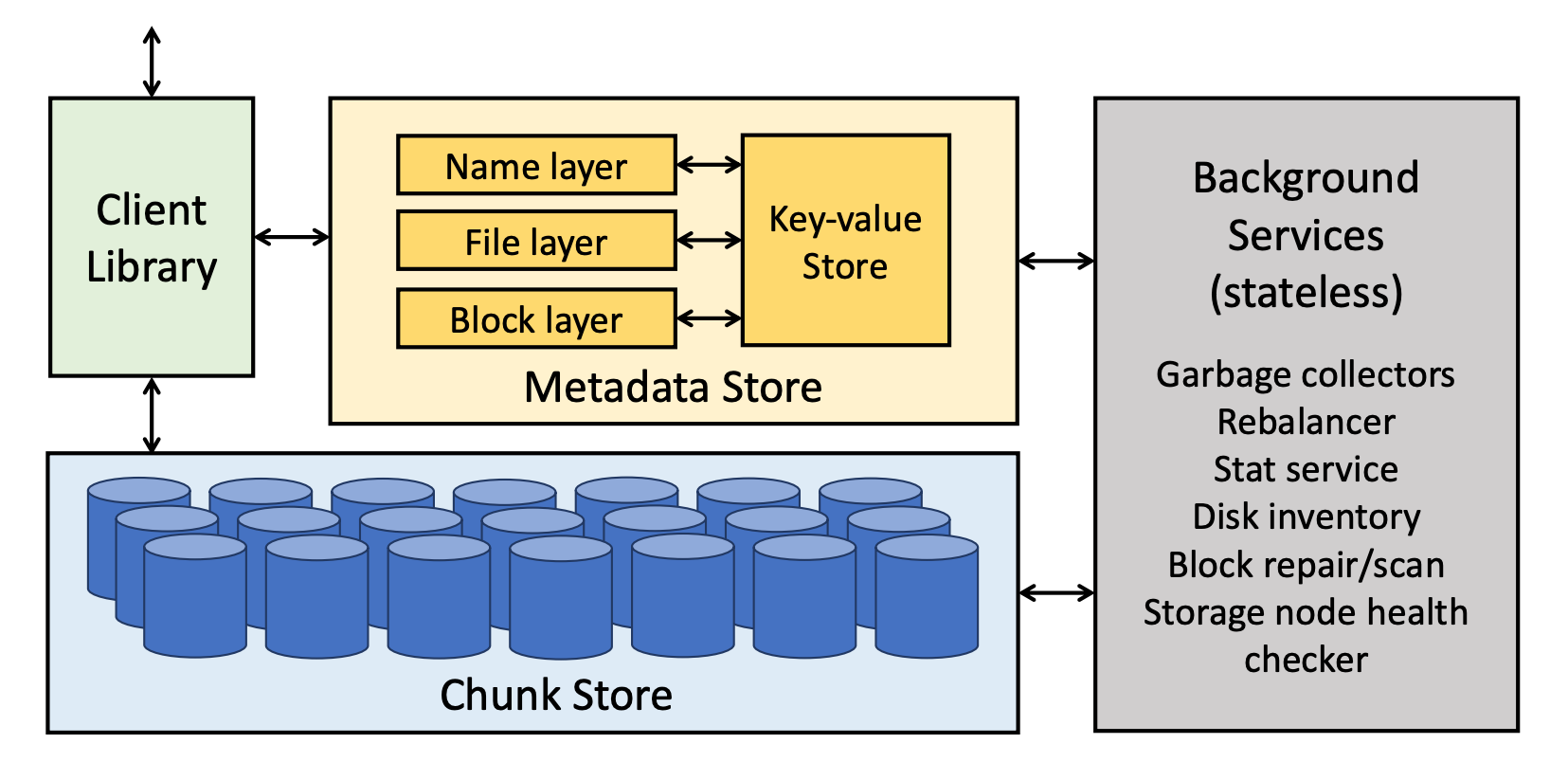

Tectonic uses a thick-client architecture, to enable streaming of data directly from disks to the client, and a sharded metadata service to serve the filesystem. Tectonic shards out the metadata layer into three components: (1) Name layer, (2) File layer, and (3) Block layer. Each of the layers is responsible for a part of the metadata, and all of them are implemented as stateless microservices on top of ZippyDB, a horizontally scalable key-value store. Since the metadata layers are completely separate, each layer can be independently scaled to handle its load.

The Name layer provides the directory tree abstraction, mapping directories to the files and directories they contain. The File layer maps from files to their constituent blocks. A block is an abstraction for a contiguous string of bytes that hides how those bytes are stored or encoded. A file is just an ordered list of blocks.

In Tectonic, blocks use either replicated encoding, where \(N\) identical chunks each store a complete copy of the block, or use Reed-Solomon encoding where the block is encoded into \(X\) data chunks and \(Y\) code/parity chunks. The Block layer contains the mapping from a block to its encoding and its set of chunks.

The final piece of Tectonic is the Chunk Store, which is its own distributed data store. Its nodes store the raw byte chunks, plus some minimal metadata to map from a chunk id to the bytes stored on a local, modified XFS filesystem.

Metadata Schema

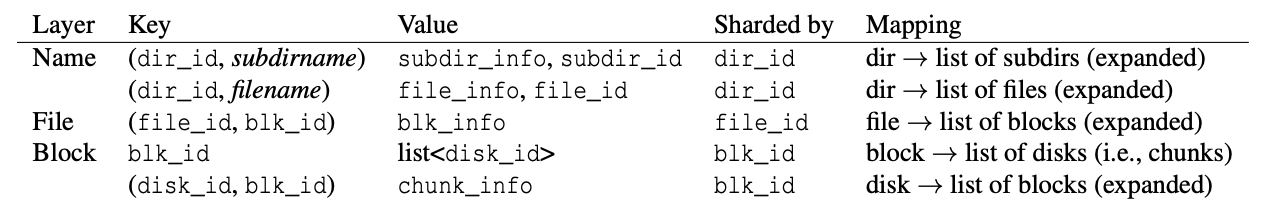

The schemas of the Name layer, File layer, and Block layer are in this table from the paper:

The Name layer maintains the mapping from a directory to the subdirectories and files contained within it, and is sharded by the directory’s dir_id. The File layer maps each file to its list of logical blocks and is sharded by the file_id. The Block layer maps each block to the list of chunks/disks that store it and is sharded by the blk_id. The Block layer also maintains a mapping from every disk to the list of blocks that are stored on it. This reverse mapping is useful for maintenance, e.g., when a disk is lost this mapping allows background maintenance tasks to enumerate exactly which blocks need to be up-replicated or otherwise reconstructed.

ZippyDB groups keys together into shards, and guarantees that all (key, value) pairs with the same sharding id are placed into the same shard. This means that Tectonic’s Name layer, which shards by dir_id, can quickly serve from a single shard a strongly consistent list of all subdirectories and files in a directory, and its File layer, sharded by file_id, can do the same for the list of all blocks for a file. However, there’s no guarantee that subdirectories are placed on the same shard as the parent directory, and ZippyDB does not provide any cross-shard transactions, so most recursive filesystem operations are not supported.

Tectonic’s ZippyDB schema also stores lists as fully “expanded” combinations of (key,value) pairs. That is, for a directory foo containing subdirectories bar and quux, Tectonic stores two separate keys (foo, bar) and (foo, quux), and handles list requests for directory foo with a prefix-key scan of foo,. This expanded list format is used to reduce the cost of adding or removing single entries to large directories that already have many millions of files, since Tectonic does not have to deserialize, edit, serialize, and write back a million entry list.
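To make the expanded layout concrete, here is a minimal sketch that uses a sorted in-memory list of keys as a stand-in for a single shard. The `ExpandedDirStore` class and its method names are hypothetical, made up for this post, and are not Tectonic or ZippyDB APIs.

```python
import bisect


class ExpandedDirStore:
    """Toy stand-in for one shard: a sorted list of (dir_id, name) keys."""

    def __init__(self):
        self._keys = []    # sorted list of (dir_id, name) tuples
        self._values = {}  # (dir_id, name) -> child id

    def add_entry(self, dir_id, name, child_id):
        # Adding one entry touches one key; no big list is rewritten.
        key = (dir_id, name)
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = child_id

    def remove_entry(self, dir_id, name):
        key = (dir_id, name)
        if key in self._values:
            del self._values[key]
            del self._keys[bisect.bisect_left(self._keys, key)]

    def list_dir(self, dir_id):
        """Prefix scan: every key whose first component is dir_id."""
        # (dir_id,) sorts before every (dir_id, name) key.
        start = bisect.bisect_left(self._keys, (dir_id,))
        entries = []
        for key in self._keys[start:]:
            if key[0] != dir_id:
                break
            entries.append((key[1], self._values[key]))
        return entries
```

A Python list still shifts elements on insert, so this only mirrors the shape of the key space. The point is that each directory entry is its own key, and listing is a scan over a contiguous key range.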

Single-writer, append-only semantics

Tectonic has single-writer, append-only semantics – meaning Tectonic prohibits multiple concurrent writers to a file, and files can only be appended to. To ensure single-writer semantics, whenever a client opens a file it is given a write token that is stored with the file in the metadata layer. Any time a client wishes to write to the file, it must include the write token, and only the most up-to-date write token is allowed to mutate the file metadata and write to its chunks.
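A minimal sketch of the write-token check might look like the following. The paper doesn’t describe how tokens are generated or compared, so the monotonically increasing counter and the `FileMetadata` class here are my own assumptions for illustration.

```python
import itertools


class FileMetadata:
    """Toy file record that only accepts appends from the latest opener."""

    def __init__(self):
        self._token_counter = itertools.count(1)  # assumed token scheme
        self.current_token = None
        self.length = 0

    def open_for_append(self):
        # Each open issues a fresh token and invalidates older ones.
        self.current_token = next(self._token_counter)
        return self.current_token

    def append(self, token, num_bytes):
        # Only the most recent token may mutate the file metadata.
        if token != self.current_token:
            raise PermissionError("stale write token")
        self.length += num_bytes
        return self.length
```

If a second client opens the file, the first client’s next append fails with a stale token instead of silently interleaving with the new writer.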

Client Data Path

While the control path goes through the metadata layer and ZippyDB, the data path is direct from the client to the chunk storage nodes. This reduces the networking bandwidth required for the system as a whole, as well as the compute required to move the bytes over the network.

Tectonic’s thick-client has very fine-grained control over its reads and writes, since it goes directly to the chunk storage nodes to read and write individual chunks. This allows the client to be tuned for different workloads, e.g., whether to optimize for durability or latency of writes. The Tectonic client can also tune these parameters per file, and even per block, as opposed to other systems that may enforce a single configuration across the entire filesystem.

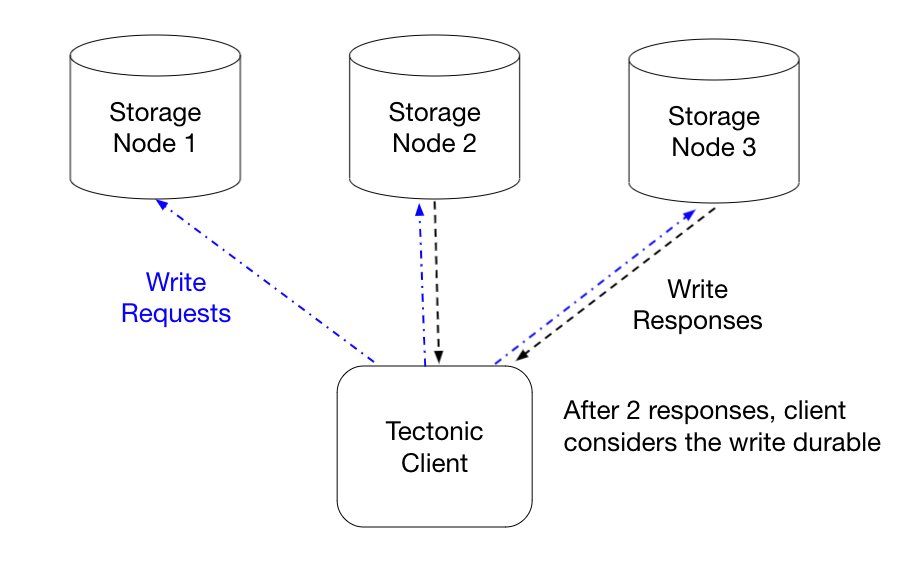

Optimizing Full Block Writes: Write Reservation Requests

Tectonic uses the common quorum commit, or quorum write technique, to optimize clients doing full-block writes – only requiring a client to durably write to a majority of all chunk replicas of a block in order to commit the block. To illustrate, for a \(R(3,2)\) replicated file with 3 replicas2 Tectonic will only require the client to finish writing to 2 of the replica chunks before considering the block committed – which helps tail latency3. If the final 3rd replica chunk write fails, Tectonic’s background maintenance services will fix up the block by repairing the 3rd chunk replica.

In addition to this common optimization, Tectonic uses a neat technique called reservation requests to optimize full-block writes. Reservation requests are similar to the hedged requests discussed in The Tail At Scale. To motivate reservation requests, we’ll first take a little detour and look at hedged requests, their benefits and drawbacks, and why reservation requests might be more suitable for Tectonic’s use case.

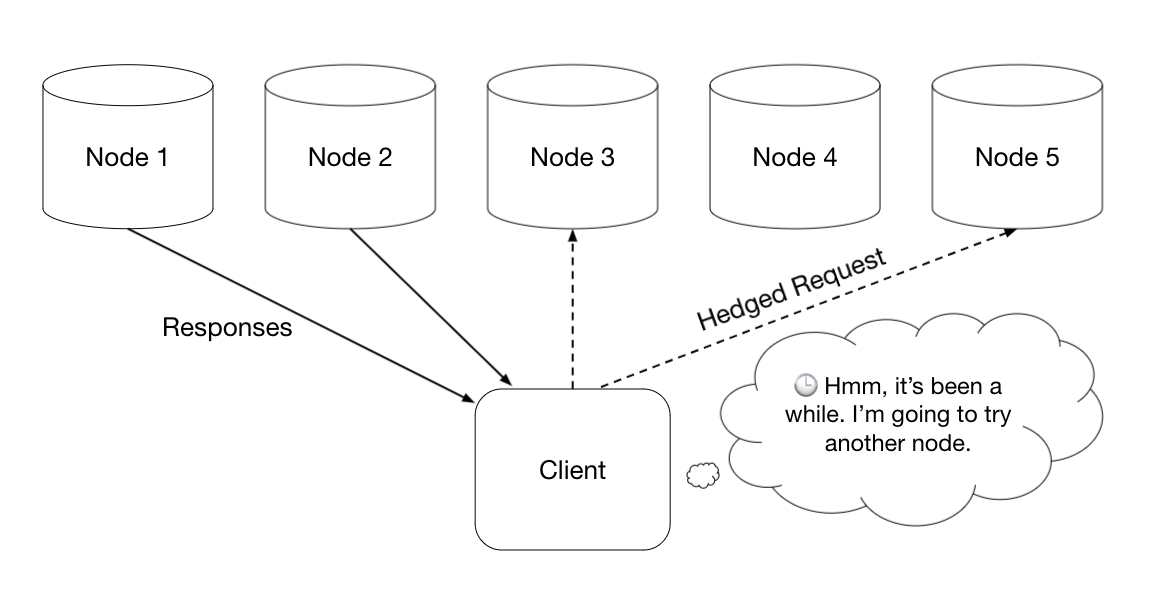

Hedged requests are a latency optimization where instead of sending a single request for a piece of work, you send the request to potentially multiple servers, and accept the first response from the fastest server. The key to not just duplicating a lot of work though is that instead of immediately sending the request to multiple servers, you wait for a bit, where “a bit” means how long you’d expect a normal request to take. If the reply still hasn’t arrived, then you “hedge” your bet that this server will respond in a reasonable timeframe and send the same request to another replica.
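Here is a tiny latency model of a hedged request, just to show the mechanics. It assumes a single backup server and that we already know how long each server would take, which is obviously not true in a real system; the function name and parameters are mine.

```python
def hedged_latency(primary_ms, backup_ms, hedge_after_ms):
    """Return (observed latency, number of requests sent).

    primary_ms:     how long the first server takes to reply
    backup_ms:      how long the second server takes once asked
    hedge_after_ms: how long we wait before sending the hedge
    """
    if primary_ms <= hedge_after_ms:
        # Common case: the first reply arrives in time, no hedge is sent.
        return primary_ms, 1
    # Slow case: hedge, then take whichever reply arrives first.
    return min(primary_ms, hedge_after_ms + backup_ms), 2
```

With a hedge delay of 20 ms, a 10 ms request is untouched, while a 500 ms straggler is cut to 32 ms by a 12 ms backup, at the cost of a second request.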

Hedged requests help improve long-tail latency, since you avoid the situation where the client has to wait for a possibly unhealthy node to respond to its request before making any progress. However, a problem with hedged requests is that they can create a blow-up in the amount of work done by the receiving servers, as well as the additional bandwidth you need for the extra requests and responses – which is of concern for a storage system like Tectonic. In addition, Tectonic clients can’t really just choose another random storage node to write their data to, they have to coordinate with the metadata layer so that the metadata accurately reflects which nodes store which chunks.

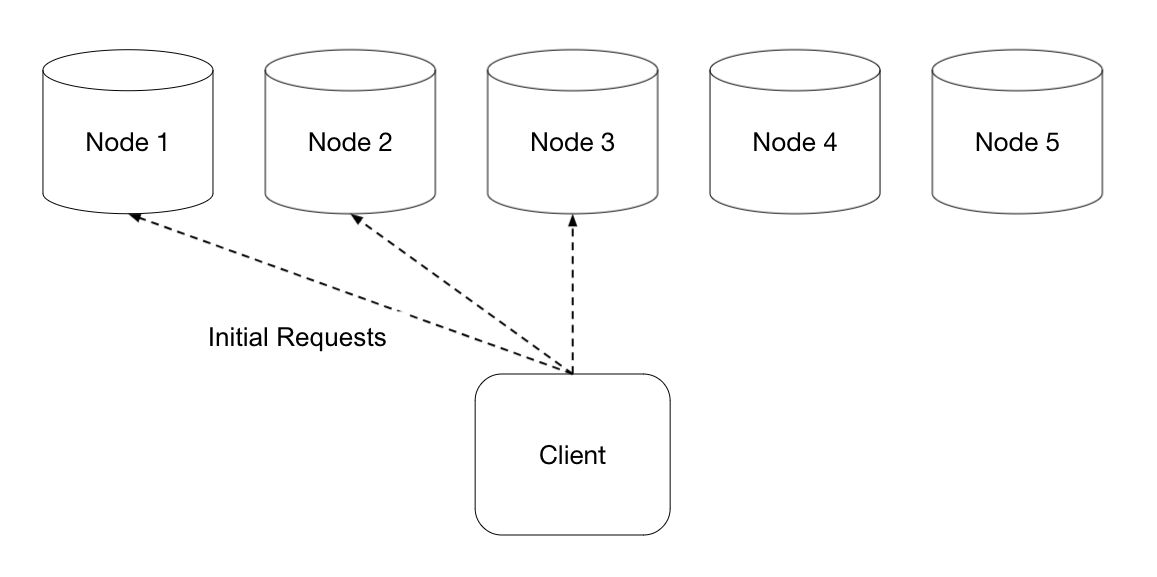

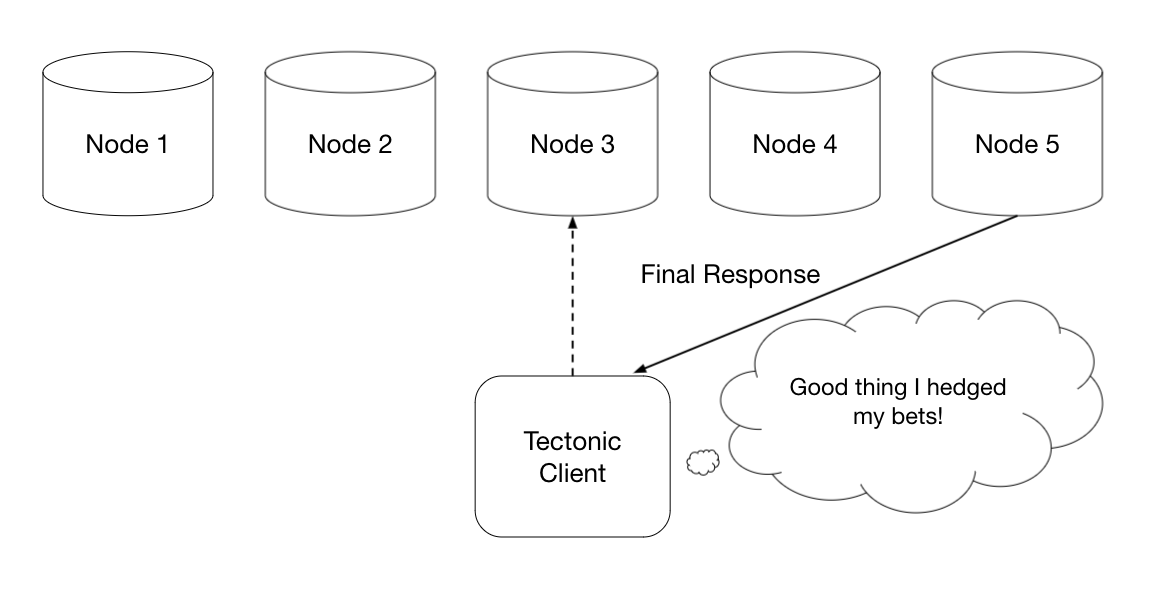

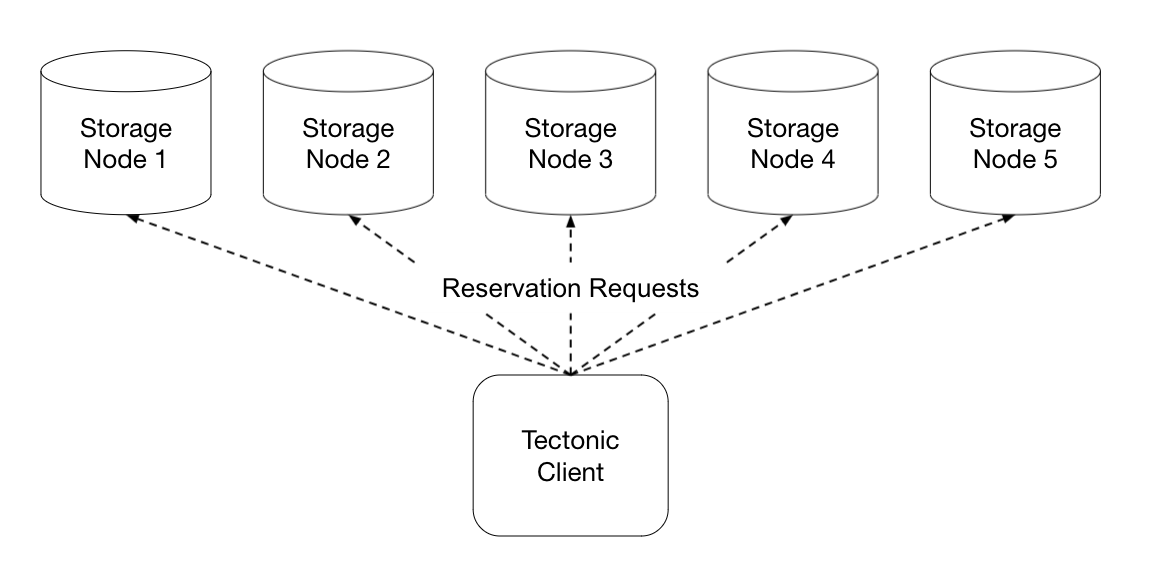

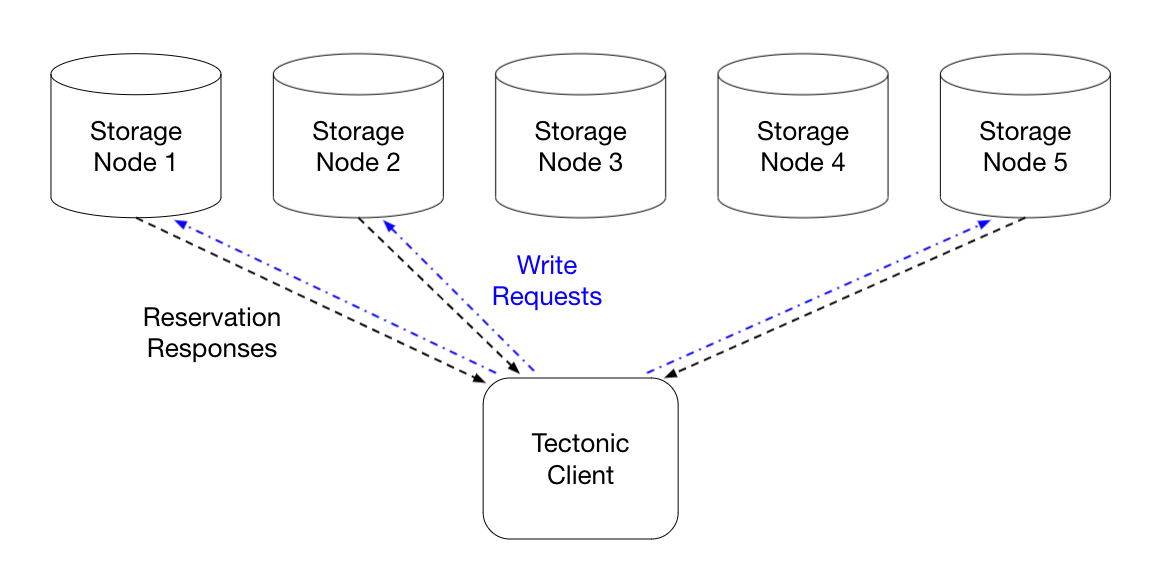

Tectonic instead uses reservation requests, which are small, ping-like, requests that a client sends to multiple chunk storage nodes to decide which nodes to actually write its data to. Tectonic chooses the chunk storage nodes that respond first as the nodes to do the actual writes to, with the hypothesis that nodes that can respond quickly are not currently overloaded. The reservation requests don’t carry any of the data for actual writing, so they use very little bandwidth, and the processing can be super cheap.

For example, if a client was writing to a \(R(3,2)\) replicated file, the client would first send reservation requests to 5 chunk storage nodes.

The 3 storage nodes that respond first are chosen by the client to actually send the 3 write requests to. In this way, the data is still only broadcast to 3 nodes, but the quick health-checking done first via the reservation requests reduces the likelihood that the client will wait on an unhealthy or stuck node, thereby reducing tail latency.
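The selection step can be sketched as below. I’m modeling the reservation responses as a dict of already-measured latencies, which is a simplification; a real client would race the requests concurrently and take the first arrivals. The function and node names are hypothetical.

```python
def pick_write_targets(reservation_latency_ms, replicas_needed):
    """Pick the nodes that answered the reservation request fastest.

    reservation_latency_ms: dict of node id -> response time in ms
    replicas_needed:        how many nodes the data will be written to
    """
    if replicas_needed > len(reservation_latency_ms):
        raise ValueError("not enough nodes responded")
    # Fast responders are assumed to be the least loaded nodes.
    ranked = sorted(reservation_latency_ms, key=reservation_latency_ms.get)
    return ranked[:replicas_needed]


# 5 reservation requests go out, but data is only sent to the fastest 3.
responses = {"n1": 3, "n2": 250, "n3": 4, "n4": 2, "n5": 900}
targets = pick_write_targets(responses, replicas_needed=3)
```

In this example the slow nodes `n2` and `n5` never receive the block, so the write doesn’t wait on them.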

Reservation requests use very little extra bandwidth or processing, but do have drawbacks compared to hedged requests. Since full-block writes have to first perform these reservation requests, they add a little bit of latency to each of the writes. This is why reservation requests are only used for full-block writes, where the amount of data being written is large enough that this latency overhead is an acceptable tradeoff for better tail latency. I would assume reservation requests work well in practice, but I’d be interested in how recent the health-checking and load-balancing signal provided by reservation requests has to be to avoid the worst of the tail latency. I could imagine a system using less frequent checks might still be able to provide the majority of the benefit of reservation requests, while also reducing the amount of work done.

Consistent Partial Block Appends

Tectonic also optimizes smaller than block-size appends via quorum append, similar to full block writes. The optimization helps reduce tail latency, but for partial block appends instead of full block writes, quorum commit is a wee bit trickier to implement correctly. If you’re writing a whole block, then you don’t have to worry about concurrent writers racing to write to the same block, but with smaller writes this can be a problem.

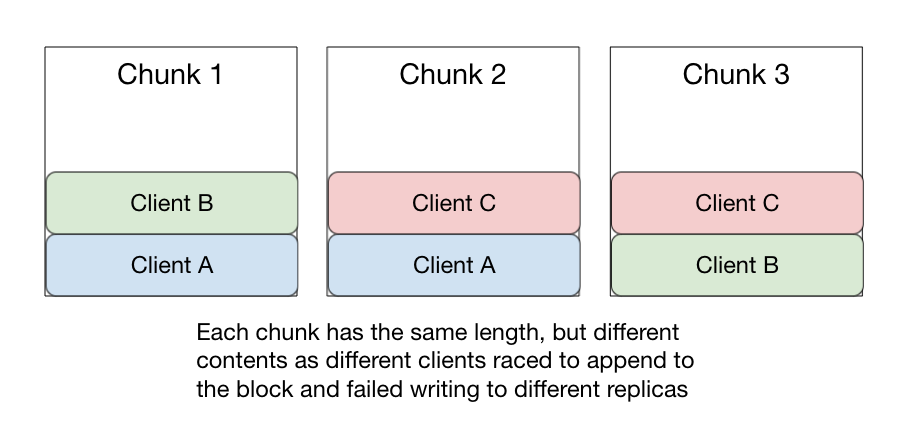

To illustrate the possible bugs that can happen, consider a \(R(3,2)\) file with an 8MB block size and multiple clients appending to a single block. Client A appends 512 bytes, and successfully commits the append to the first and second chunks, but the write to the third chunk replica fails. Then Client B takes control of the file and appends another 512 bytes, but the 2nd chunk replica fails, and the 1st and 3rd succeed. Finally, client C takes control of the file and appends another 512 bytes, but the 1st chunk write fails, while the 2nd and 3rd succeed. You’re left with the 1st chunk replica at length 1024 bytes (1st & 2nd appends), the 2nd replica at 1024 bytes (1st and 3rd appends), and the 3rd replica at 1024 bytes (2nd & 3rd appends).

Readers would see file metadata reporting a block length of 1536 bytes, but none of the actual data chunks are that length! And none of the chunks contain the same data! And it’s unclear exactly what readers might read while these writes are being done! Or if all the chunks will end up with the same data! Or if the rest of the sentences in this blog post will end in exclamation points! 4
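The scenario above is easy to replay in a few lines. This is a toy model of naive quorum appends with none of Tectonic’s safeguards: each replica simply concatenates whatever appends reach it, and the metadata length grows whenever a quorum succeeds. All names are made up for the example.

```python
def apply_appends(appends, num_replicas=3, quorum=2):
    """Replay naive quorum appends.

    appends: list of (payload, set of replica indexes the write reached)
    Returns (replica contents, length recorded in metadata).
    """
    replicas = [b"" for _ in range(num_replicas)]
    committed_length = 0
    for payload, reached in appends:
        for idx in reached:
            # Each replica appends at its own current end of data.
            replicas[idx] += payload
        if len(reached) >= quorum:
            committed_length += len(payload)
    return replicas, committed_length


appends = [
    (b"A" * 512, {0, 1}),  # client A: 3rd replica write fails
    (b"B" * 512, {0, 2}),  # client B: 2nd replica write fails
    (b"C" * 512, {1, 2}),  # client C: 1st replica write fails
]
replicas, committed_length = apply_appends(appends)
```

The metadata ends up at 1536 bytes while every replica holds 1024 bytes, and no two replicas hold the same bytes.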

The way Tectonic deals with these potential problems is to enforce two rules: (1) Only allow the client that created a block to append to it, and (2) The client commits to the metadata layer the new block-length and checksum before acknowledging the quorum append to the application.

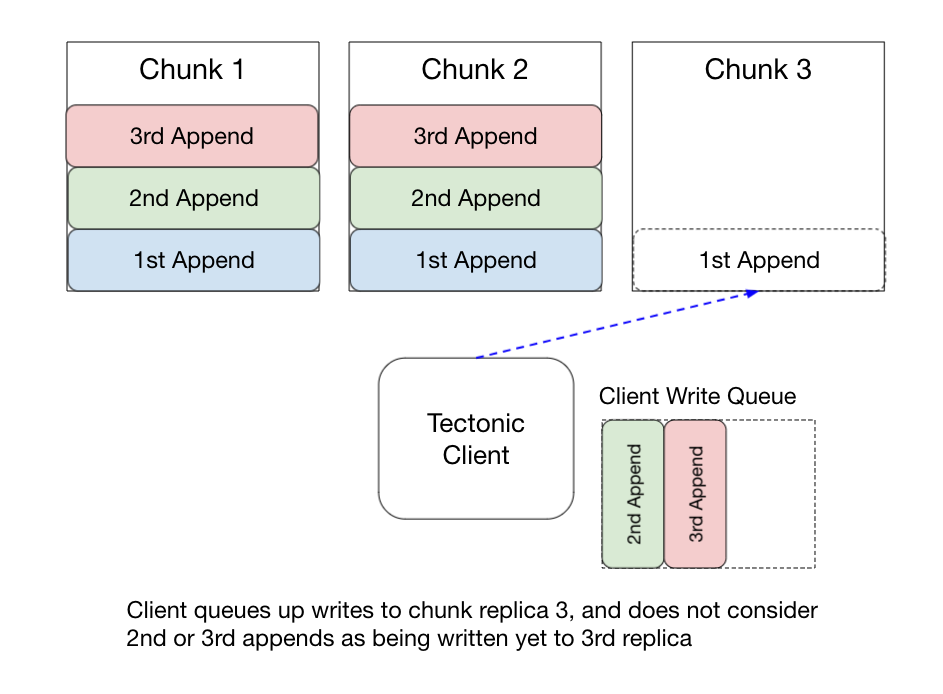

Although the paper doesn’t spell this out explicitly, I presume that a single client will serialize its appends to a given chunk storage node – that is, if it’s performing a first append and a second append, it will wait for the first append to succeed before writing the second append to the storage node. With this serialization done by a client, and enforcing only a single client can write to a block, our race above cannot happen as the client will wait for the 1st append to happen to a chunk storage node, before writing the 2nd append. This makes sure that multiple writers won’t stomp over each other.

Committing the block-length and checksum after each quorum append, but before acknowledging to the application, gives readers guarantees about what they can read. It means the application should have read-after-write consistency, since any data that’s been acknowledged by Tectonic to the application has been durably committed to two chunk storage nodes and the new length committed to the metadata layer. In general, this means that if Tectonic reports a block length of \(B\) bytes, then at least \(B\) bytes have been committed to two chunk storage nodes, and any client can read up to \(B\) bytes and be guaranteed they’re correct.

Re-encoding Blocks for Storage Efficiency

Many workloads are “write once, read rarely” which would imply that the data should be Reed-Solomon encoded rather than replicated to reduce the storage overhead. However, the data is often written interactively, and the write latency is important to the client so it makes sense to use replicated encoding to reduce tail latency5. It would be nice to take advantage of the improved latency of replicated writes while also having the improved byte efficiency of Reed-Solomon encoding.

Tectonic is able to have its cake and eat it too by having the client write the block in a replicated encoding, but then once the block is full and sealed, the client will re-encode the block to Reed-Solomon in the background. This way the application gets the improved latency of replicated writes, with all the optimizations we discussed, but the space-efficiency of Reed-Solomon storage. It’s unclear from the paper what happens if the client application crashes, but I assume there is a background service that can re-encode fully written blocks to Reed-Solomon.
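To put rough numbers on the space savings, here is the raw storage overhead of each encoding. I’m using 3-way replication and \(RS(10,4)\) because those are the parameters used as examples elsewhere in this post; they are not meant to describe any particular Tectonic tenant.

```python
def replicated_overhead(replicas):
    """Bytes stored per logical byte when keeping full copies."""
    return float(replicas)


def reed_solomon_overhead(data_chunks, code_chunks):
    """Bytes stored per logical byte for an RS(data, code) encoding."""
    return (data_chunks + code_chunks) / data_chunks


replicated = replicated_overhead(3)           # 3 full copies
erasure_coded = reed_solomon_overhead(10, 4)  # 14 chunks for 10 chunks of data
```

Under these assumptions, re-encoding a sealed block takes it from 3 bytes stored per logical byte down to 1.4.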

Copysets: Reducing the Probability of Data Loss

Tectonic uses copyset replication to minimize its probability of data loss in the face of coordinated disk failures. Copyset replication is really smart, but we’ll have to back up a bit to discuss the difficult problems and tradeoffs that exist when trying to place data onto disks.

When choosing which disks to write data to, in a world where disks can fail, there is a tradeoff between the likelihood of data loss and how much data is lost per data loss event. For example, if for all \(R(3,2)\) replicated files, you write each chunk replica to a randomly selected disk, it becomes increasingly likely that any 3 disk failures will result in a data loss event for some replicated file. This is because for every block, we choose a random set of 3 disks to store its chunks on, and as we add more blocks to the system, eventually every set of 3 disks will store all the chunk replicas for some block.

This means that for random disk selection, when we lose disks due to failures in the datacenter, we have the highest probability of a data loss event. However, the amount of data lost per data loss event is very low, because of the low probability that a block will have its chunk replicas on exactly the set of disks that fail since they were chosen randomly. One way to think about this is that if 3 disks fail then the expected value of blocks lost is low, but the probability that at least one block will be lost is high.

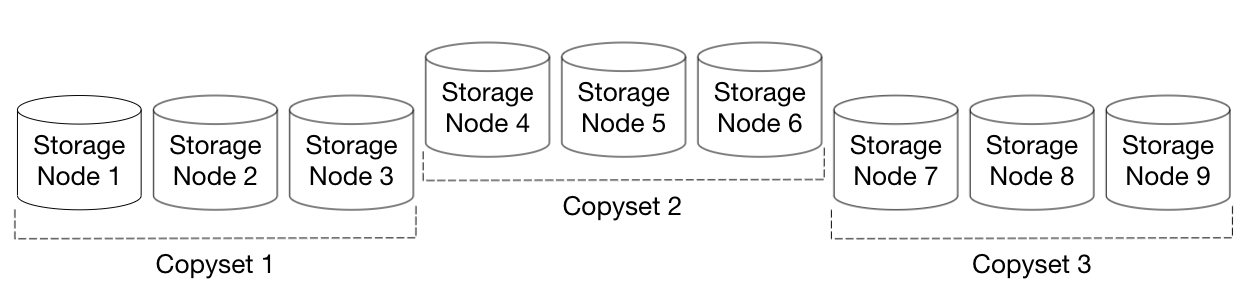

However, you could imagine another approach where all the nodes are separated into sets of 3 nodes, each called a copyset. When we want to assign chunks of a block to storage nodes, we first choose a copyset for the block, and then the chunk replicas are assigned to the 3 nodes of the copyset. In a datacenter with 9 nodes, nodes \([1,2,3]\) might be one copyset, \([4,5,6]\) would be another, and \([7,8,9]\) would be the last.

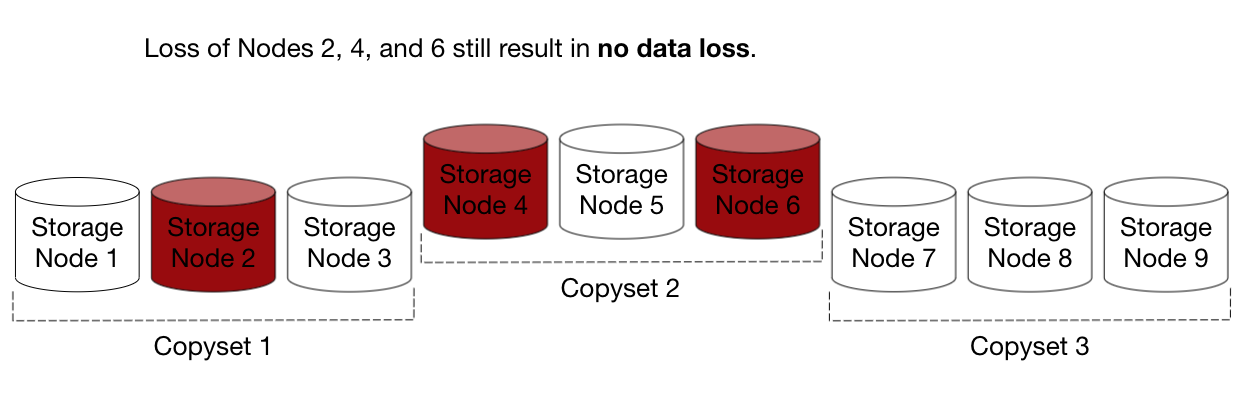

In this scheme, there are only 3 three-node failures that would cause data loss, i.e., only a loss of all the nodes of a copyset would cause data loss for a block. This is a very low probability event given that only 3 sets out of a possible \({9 \choose 3} = 84\) three-node sets cause data loss. In comparison, random node replication will eventually make all 84 three-node sets a copyset for some block, so copyset replication is a 28x improvement over random replication for reducing the probability of data loss. Remember though, that for copyset replication the amount of data lost per data loss event would be quite high – all of the blocks assigned to the copyset would be lost.
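The counting argument can be checked directly. The helper below assumes exactly `replicas` nodes fail at once and that every such failure set is equally likely, which is the simplified model used in this section.

```python
from math import comb


def loss_probability(num_nodes, replicas, num_copysets):
    """Chance that a random set of `replicas` failed nodes is a copyset."""
    return num_copysets / comb(num_nodes, replicas)


all_triples = comb(9, 3)                 # every possible 3-node failure set
strict = loss_probability(9, 3, 3)       # 3 disjoint copysets
random_placement = loss_probability(9, 3, all_triples)
improvement = random_placement / strict  # how much less likely loss becomes
```

For 9 nodes this gives 84 possible triples and a 28x lower chance of a data loss event for the strict copyset layout.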

While losing all of the data is probably not the right trade off, we’d probably trade off a little bit more data lost per event in order to reduce the likelihood of any data loss event. This is because any amount of data loss incurs such a large fixed overhead of work to recover data that as operators we’d really prefer to avoid it as much as possible. This is our motivation for finding a better way of allocating chunks to disks.

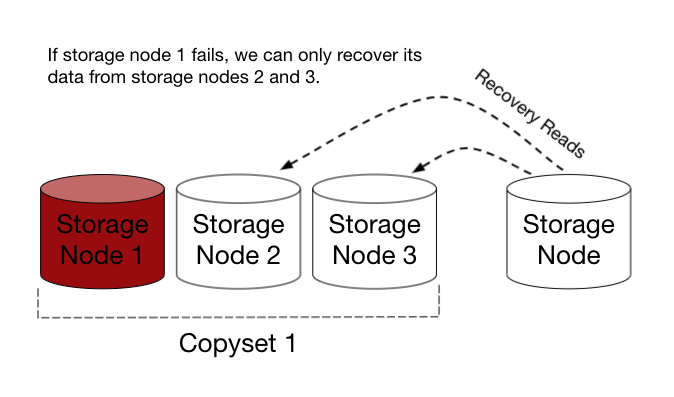

Besides affecting the probability of data loss, how we assign chunks to nodes also affects other properties of the system that our hypothetical solution will have to deal with. In our naive 3 copyset setup, if node 1 failed, we could only recover that data from node 2 and 3, which could potentially increase the load on those nodes by 50%. For the random disk selection setup, if a node fails we can recover the data from all of the remaining nodes, since the data has been spread uniformly across all 84 copysets. So the random disk selection has the edge here in providing good load-balancing of potential recovery work.

However if we broke with our strict copyset setup, and allowed the data on a node to potentially be replicated to a larger number of other nodes, then we could recover from a single node failure faster, and not put as much load on all of the remaining nodes.

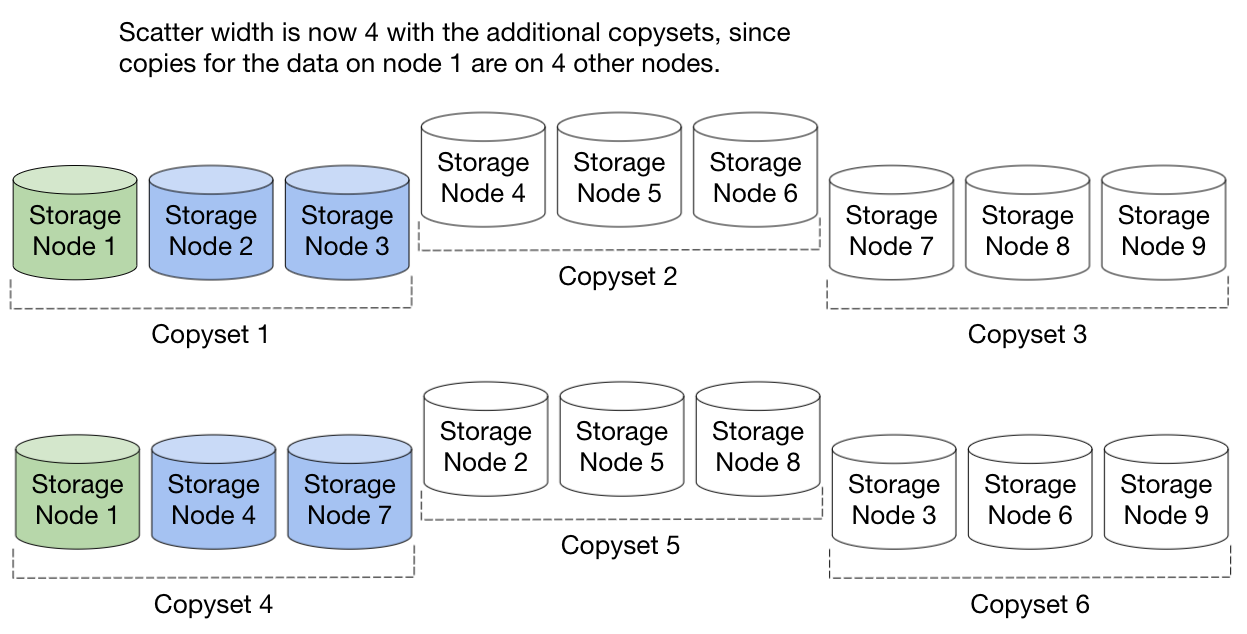

Let’s call the number of nodes that store copies for a single node’s data our scatter width. In our example above of 9 nodes, and 3 copysets, our scatter width is 2, as only 2 other nodes have copies of any other node’s data – node 1 has its data also on node 2 and node 3. However, if we introduced 3 more copysets of \([1,4,7]\), \([2,5,8]\), and \([3,6,9]\) then now the data on node 1 could be on either node 2, 3, 4, or 7, so the scatter width would be 4 now – allowing us to recover from node failures faster.
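Scatter width is simple to compute from a list of copysets. This sketch just counts the distinct peers that share a copyset with a node; the function name is mine.

```python
def scatter_width(copysets, node):
    """Number of other nodes that can hold copies of `node`'s data."""
    peers = set()
    for copyset in copysets:
        if node in copyset:
            peers.update(copyset)
    peers.discard(node)
    return len(peers)


rows = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
columns = [[1, 4, 7], [2, 5, 8], [3, 6, 9]]

narrow = scatter_width(rows, node=1)           # peers: 2, 3
wide = scatter_width(rows + columns, node=1)   # peers: 2, 3, 4, 7
```

Adding the three extra copysets doubles the number of copysets from 3 to 6 and doubles node 1’s scatter width from 2 to 4.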

So to summarize, we’d like to have a high scatter width to ensure we can recover data from lots of nodes, but we also want to keep the number of copysets low in order to reduce the probability of a data loss event. Coming up with a scheme to do both of these things is a non-trivial problem.

The Copysets paper presents a near optimal solution to this problem called copyset replication, that aims to create the fewest number of copysets given a scatter width. To put it another way, copyset replication reduces the probability of a data loss event while still allowing good load-balancing between nodes.

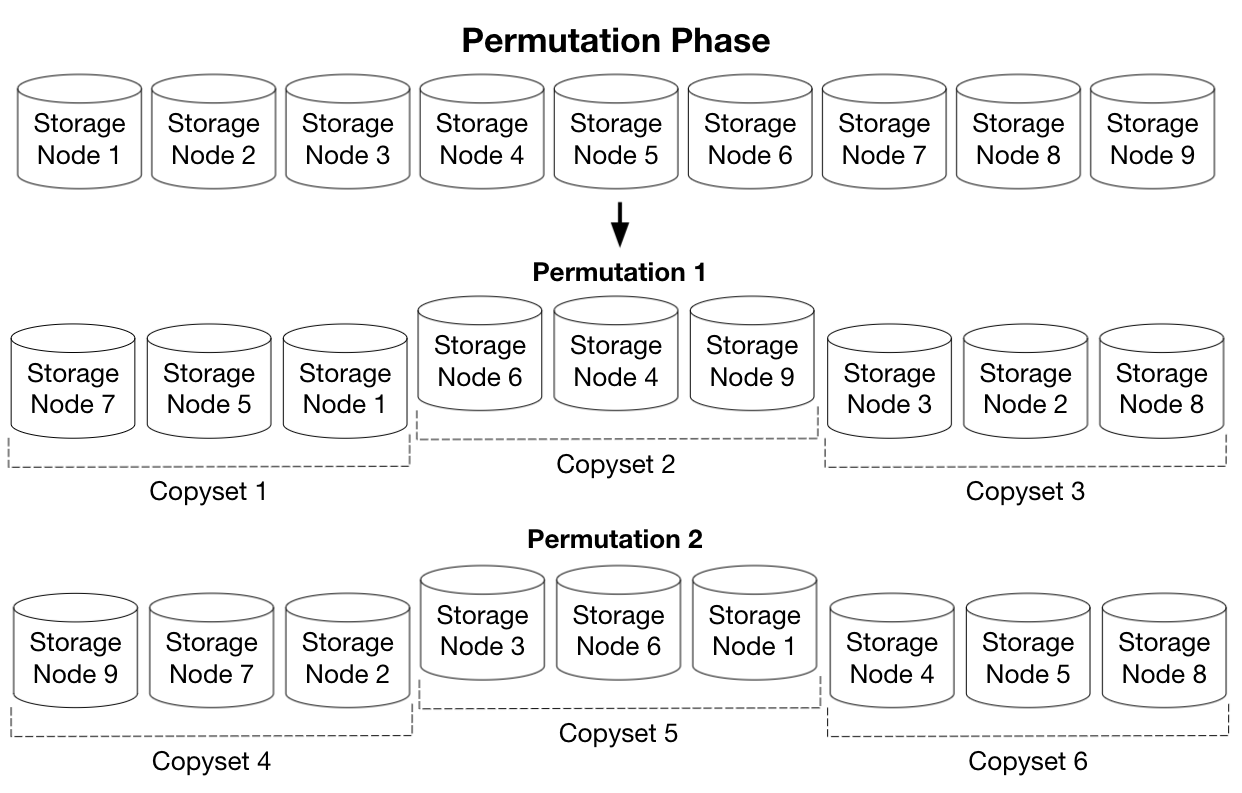

The paper explains why their algorithm is near optimal, but the core idea is that you create permutations of all nodes in the system, then form copysets from a permutation by chunking up the permutation into runs of consecutive nodes. You can increase scatter width by creating more permutations, but this will create more copysets since an additional permutation will have a different shuffling of nodes which results in different and more copysets. But the key is that this increase in the number of copysets will be as minimal as possible, keeping the probability of a data loss event as low as possible.
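A bare-bones version of the permutation step looks like this. It skips everything a production placement would need (failure domains, rack awareness, choosing the number of permutations from a target scatter width), and the function name and seed parameter are assumptions for the sake of a reproducible example.

```python
import random


def build_copysets(nodes, replicas, num_permutations, seed=0):
    """Form copysets by chunking random permutations of the nodes."""
    rng = random.Random(seed)
    copysets = set()
    for _ in range(num_permutations):
        perm = list(nodes)
        rng.shuffle(perm)
        # Consecutive runs of `replicas` nodes become copysets.
        # Leftover nodes at the end of a permutation are skipped.
        for i in range(0, len(perm) - replicas + 1, replicas):
            copysets.add(frozenset(perm[i:i + replicas]))
    return copysets


one_perm = build_copysets(range(1, 10), replicas=3, num_permutations=1)
two_perms = build_copysets(range(1, 10), replicas=3, num_permutations=2)
```

Each extra permutation adds at most `len(nodes) // replicas` new copysets, so the copyset count grows linearly with the number of permutations instead of approaching all 84 triples.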

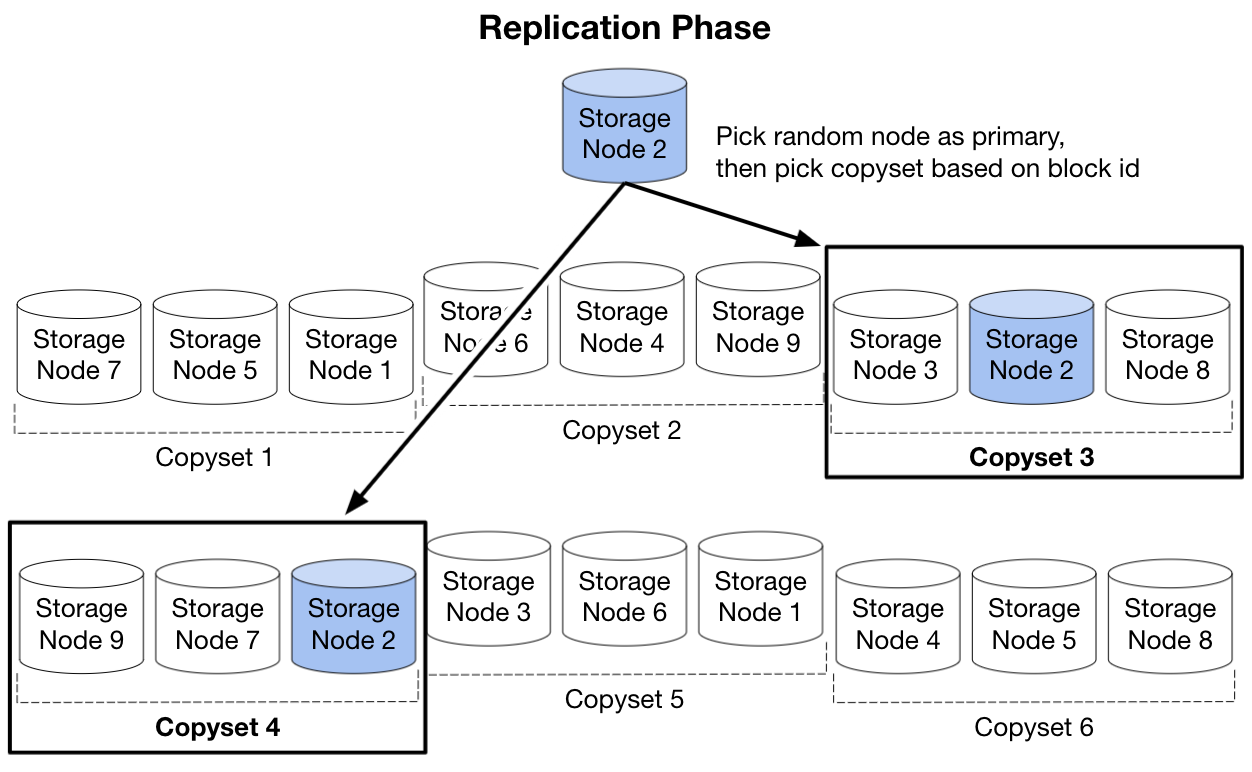

When a client needs to write a block, first a node is chosen randomly as the primary, which constrains the set of copysets down to one per permutation. You could choose a random permutation and its copyset, or in Tectonic’s case the Block layer chooses the copyset from the permutation corresponding to that block ID modulo the number of permutations so that it’s deterministic.
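Here is how I picture that deterministic choice, as a sketch. It assumes the permutations are stored as ordered lists and that copysets are the consecutive runs within each one; the function name and argument layout are hypothetical.

```python
def copyset_for_block(permutations, replicas, primary, block_id):
    """Pick the copyset containing `primary` from the block's permutation."""
    # The block ID selects the permutation deterministically.
    perm = permutations[block_id % len(permutations)]
    # Within a permutation, the primary belongs to exactly one run.
    position = perm.index(primary)
    start = (position // replicas) * replicas
    return perm[start:start + replicas]


permutations = [
    [1, 2, 3, 4, 5, 6, 7, 8, 9],
    [1, 4, 7, 2, 5, 8, 3, 6, 9],
]
even_block = copyset_for_block(permutations, 3, primary=1, block_id=10)
odd_block = copyset_for_block(permutations, 3, primary=1, block_id=11)
```

With the same primary, block 10 lands on `[1, 2, 3]` and block 11 on `[1, 4, 7]`, and anyone who knows the block ID can recompute the choice.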

While the initial placement is optimal with respect to the copyset scheme, disks will still fail or be taken out for maintenance, so the background rebalancer service also tries to keep a block’s chunks in its original copyset.

Copyset replication, and Tectonic’s use of copyset replication, is really smart. Any data loss at all incurs quite a large amount of work, so reducing the occurrence of data loss with a small increase in data lost per event, while still allowing for good load-balancing and quick recovery from node failures is quite impressive!

Sealing API and Caching

In order to help scale the metadata layer, Tectonic allows users to seal a block, file, or directory, preventing any future changes. This sealing allows metadata to be cached efficiently, both at the metadata nodes and at the client which allows for Tectonic to serve more traffic. The one exception to full caching is the block to chunk mapping since disks fail and chunks can migrate to new disks via the background maintenance processes. But stale block metadata can easily be detected when trying to access an incorrect chunk storage node, and can be fixed by forcing the client to perform a block metadata refresh.

The paper calls out the scaling and efficiency benefits of sealing, which are certainly significant, but it can also be a very useful API for application developers to ensure correctness. For example, once a finalized read-only dataset is written to a directory by an application, allowing the application to seal the directory and have Tectonic enforce its application invariants is very useful.

Multitenancy

Tectonic is a multi-tenant system supporting resource sharing and isolation. Tectonic only directly supports a dozen large-scale tenants, each of which is a large service that itself serves many hundreds of applications, like News Feed, Search, and Ads.

Tectonic splits up resources into ephemeral (easy to reclaim) and non-ephemeral (hard to reclaim) resources. Example ephemeral resources are CPU or IOPS on the storage nodes. CPU is ephemeral because its usage can burst high with a spike of traffic, but can easily be reclaimed by rate-limiting incoming requests. The only non-ephemeral resource is disk space, which is impossible to reclaim without causing data loss.

Tectonic has a somewhat inflexible, and strict isolation mechanism for its non-ephemeral resource disk space, and a much more fluid resource sharing system for its ephemeral resources. Disk space is statically partitioned between the tenants, with no automatic elasticity in their quota. Tenants are responsible for allocating their own quota amongst their users, and operator action is required to make changes to a tenant’s quota. However the quota can be updated while the system is running by an operator, so it can be done in case of emergency without downtime.

In order to support better sharing of ephemeral resources, Tectonic breaks down tenants further into TrafficGroups. A TrafficGroup is a collection of clients that have similar latency and IOPS requirements. For example, a data lake query engine tenant like Presto might have a TrafficGroup for all its best-effort, exploratory query traffic. Each TrafficGroup is assigned a TrafficClass that dictates at what priority its requests are serviced. The TrafficClasses are Gold, Silver, and Bronze, corresponding to latency-sensitive, normal, and best-effort priorities.

Each tenant gets a guaranteed share of ephemeral resources, with each of a tenant’s TrafficGroups getting some sub-share of its resources. Since there is always slack capacity in the system, because not all tenants are using their full share of resources all of the time, unused ephemeral resources are shared in a best-effort fashion. Any unused ephemeral resources within a tenant get distributed to that tenant’s other TrafficGroups, prioritized by TrafficClass. This allows tenants to get their fair share of resources by default.

Whenever one TrafficGroup uses resources from another TrafficGroup, the resulting traffic gets treated with the minimum TrafficClass of the two. This guarantees that the overall mix of traffic by TrafficClass does not change based on utilization, helping to avoid possible metastable failures. For example consider an idle low-latency Gold TrafficGroup SuperSonic, and another best-effort Bronze TrafficGroup SnailMail using SuperSonic’s spare resources. If SnailMail’s traffic was treated as low-latency, then when SuperSonic starts to use its guaranteed low-latency resources there could potentially be more low-latency traffic generated by both SuperSonic and SnailMail than was originally provisioned for SuperSonic. This can lead to bad latency spikes for SuperSonic when it tries to use its guaranteed low-latency resources, exactly what we’d like to prevent.
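The “minimum of the two classes” rule is small enough to write down. The enum values and function name below are my own; only the Gold, Silver, and Bronze names come from the paper.

```python
from enum import IntEnum


class TrafficClass(IntEnum):
    """Higher value means higher priority."""
    BRONZE = 0  # best-effort
    SILVER = 1  # normal
    GOLD = 2    # latency-sensitive


def effective_class(borrower, lender):
    """Class applied when `borrower` uses `lender`'s spare resources."""
    return min(borrower, lender)


# SnailMail (Bronze) borrowing from SuperSonic (Gold) stays Bronze.
borrowed = effective_class(TrafficClass.BRONZE, TrafficClass.GOLD)
```

Borrowing can therefore never promote traffic to a higher class than it started with.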

The rate-limiting and throttling is done by the client using high-performance distributed counters. This allows the client to potentially throttle itself instead of wasting work sending requests that will be rejected. The paper doesn’t mention it but presumably the metadata layer and storage nodes also make use of the distributed counters to do their own local throttling.

Metadata Hotspots: Hot Directories In Your Area

One problem Tectonic faced in deploying the system is hotspotting in the metadata layer. Even though Tectonic uses hash-partitioning for all of its metadata layers, which should in theory uniformly distribute the load for the system, it does not work that well in practice for the Name layer. For files or blocks, no one file or block can get that hot, but for the Name layer, a single directory can get an avalanche of load that can cause imbalances. Since a single directory can only be served by one ZippyDB node, this can cause load spikes that can render a single node unusable and its data unavailable. This single directory hotspotting is pretty common, as many batch processing systems will store data within a single directory, and spin up many thousands of workers to read data from that directory. The paper mentions specifically data warehouse jobs that follow this pattern of a single coordinator that lists files in a directory, then shards out those files to many thousands of workers, causing a huge spike of load onto the Name layer node responsible for that directory.

Tectonic handles this single directory hotspotting problem via co-design with the data warehouse team by exposing a file listing API that returns both the file name and the underlying file ID. This allows the worker nodes to bypass the Name layer when opening the files and instead go directly to the File layer with the file ID.

The authors mention in the paper that this Name-layer metadata hotspotting actually forced them to separate the Name and File layers, which originally were one layer. Presumably this allows the File layer to be extremely well load-balanced, and can be tuned more aggressively, while the Name-layer can be given more slack capacity to withstand load spikes as necessary.

Check Some Checksums

Tectonic has found that memory corruption is actually very common at scale, even with ECC RAM. Tectonic combats this by including checksums whenever moving data across processes, and even sometimes within a process as well. While the main source of memory corruption is likely actual hardware faults at scale, another common but less considered problem, is client applications introducing memory stomping bugs. These bugs can end up corrupting the data or buffers inside a filesystem client library, causing hard to reproduce bugs or silent data corruption. Adding checksums helps to prevent both these application bugs and hardware faults from causing problems, and while it adds non-zero cost it is a very worthwhile tradeoff.
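As a small illustration of carrying a checksum alongside a buffer, here is a sketch using CRC32 from Python’s standard library. The choice of CRC32 and the `wrap`/`verify` helpers are assumptions on my part; I’m not claiming this is the checksum Tectonic uses.

```python
import zlib


def wrap(payload: bytes):
    """Attach a checksum before handing the buffer to another component."""
    return payload, zlib.crc32(payload)


def verify(payload: bytes, checksum: int) -> bytes:
    """Recompute the checksum on receipt and refuse corrupted data."""
    if zlib.crc32(payload) != checksum:
        raise ValueError("checksum mismatch: buffer was corrupted")
    return payload


data, crc = wrap(b"chunk bytes headed for a storage node")
# Simulate a single flipped bit from bad memory or a stomping bug.
corrupted = bytes([data[0] ^ 0x01]) + data[1:]
```

Calling `verify(data, crc)` returns the buffer, while `verify(corrupted, crc)` raises instead of letting the bad bytes travel further.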

Managing Reconstruction Load: Striped or Contiguous

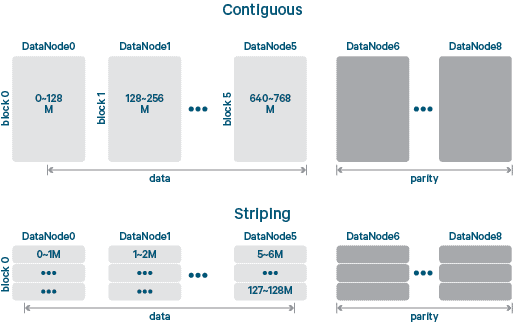

In general, Reed-Solomon encoded blocks can either be stored contiguously, where a block is broken up into \(D\) data chunks and \(C\) code chunks, and each data or code chunk is written contiguously as a single blob to a storage node. Or the block can be written striped where each of the \(D\) data and \(C\) code chunks are subdivided into smaller “stripes” of data that are then round-robinned across a set of storage nodes. The blog post cited in the paper has more details and helpful visuals like this one:

The benefit of storing data blocks contiguously is that most reads are smaller than the block-size, so they can be serviced by just reading from the data block directly on the storage node, making the common case very fast and efficient. If instead the data was striped, then reads would be more likely to cross stripe boundaries, and so reads for a single block would have to fan out to more storage nodes, which requires more IOPS and can introduce extra latency6.

However, the problem with contiguous storage is that if a data chunk were to fail, then reconstruction would have to reconstruct the entire block, instead of a much smaller stripe-sized amount of data. Consider a \(RS(10,4)\)7 encoded file with 10 data chunks and 4 code chunks, with block size of 64MB, and a stripe size of 8MB. A normal 8MB, block-aligned, read for a contiguous file, would just read 8MB of data from a data chunk. However, if that disk fails, then the reconstruction would have to read 10 data or code blocks, each of size 64MB, for a total of 640MB of data. That’s a blow-up of 80x!

If instead the file was striped, then it would still have to do the reconstruction, but it would be reading only 10 stripes, which would be just 80MB. It’s still a 10x increase, but at least it’s not 80x.
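The arithmetic for both layouts, using the numbers from the example above, is spelled out below. The helper name is mine, and it assumes a reconstruction always reads one unit from each of 10 surviving data or code pieces.

```python
def reconstruction_read_mb(pieces_needed, unit_mb):
    """Total MB read to rebuild one lost unit."""
    return pieces_needed * unit_mb


read_mb = 8  # size of the original client read

contiguous_mb = reconstruction_read_mb(10, 64)  # ten 64MB units
striped_mb = reconstruction_read_mb(10, 8)      # ten 8MB stripes

contiguous_blowup = contiguous_mb // read_mb
striped_blowup = striped_mb // read_mb
```

That works out to 640MB versus 80MB read, or 80x versus 10x the size of the original 8MB read.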

Now the problem with doing reconstruction on contiguously encoded files isn’t just the large increase in the amount of data that has to be read, but that this increase occurs unpredictably. Adding more variance to a system is generally not a great way to make a stable system. Introducing a source of random, sudden bursts of extra read load from reconstruction reads is bad because this extra load could potentially overload another disk, causing further reconstruction reads which could then overload another disk, and so on and so forth8. It’s true this same problem affects striped files, but the effect is dampened compared to contiguous files.

Tectonic decided to thread the needle by using contiguous encoding to make the common case fast and efficient, but implementing a hard-cap on the number of reconstruction reads in order to deal with possible reconstruction storms. Tectonic caps reconstruction reads to a max of 10% of all reads. This way there is a bound on any possible spike of reconstruction reads, allowing reads to fail in order to prevent cascading failures and protecting the overall health of the system.
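One way to picture such a cap is a simple admission counter. The paper doesn’t say how the 10% is measured (per node, per time window, and so on), so the lifetime counters and class name below are purely illustrative assumptions.

```python
class ReconstructionThrottle:
    """Admit reconstruction reads only while they stay under a cap."""

    def __init__(self, max_percent=10):
        self.max_percent = max_percent
        self.total_reads = 0
        self.reconstruction_reads = 0

    def record_normal_read(self):
        self.total_reads += 1

    def try_reconstruction_read(self):
        """Return True if admitted, False if the read should fail."""
        new_recon = self.reconstruction_reads + 1
        new_total = self.total_reads + 1
        # Integer math avoids floating point surprises at the boundary.
        if new_recon * 100 > self.max_percent * new_total:
            return False
        self.reconstruction_reads = new_recon
        self.total_reads = new_total
        return True
```

After 9 normal reads, one reconstruction read is admitted (1 of 10 reads), and the next is rejected because it would push the share above 10%.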

Conclusion

The Tectonic paper is a great read, giving a behind the curtain look at how the authors built and operate a modern datacenter-scale distributed filesystem. I really enjoy the idea of reservation requests and the use of copyset replication and think they both have wide application. Hopefully this has been a helpful summary of the most interesting (to me) parts of the paper. Until next time.

Acknowledgements

Thanks to Bill Lee and Jeff Baker for reading earlier drafts of this post and providing feedback. All mistakes or inaccuracies are my own.

1. ACM says it has 2,729 citations, but that honestly feels low. ↩︎

2. \(R(A,B)\) is notation for replicated file encoding with \(A\) replicas and \(B\) chunks needed to be written to commit a block as written. ↩︎

3. If it’s unclear why reducing the number of chunk writes we have to wait for from 3 to 2 is such a nice improvement in tail latency, perhaps reading my other post on fanouts and percentiles might give some intuition. ↩︎

4. No, they will not. ↩︎

5. Replicated files have improved tail latency via quorum appends, and also have the property that you don’t have to buffer up writes to a block or stripe boundary in order to encode the data before writing it. ↩︎

6. On spinning disks reading a random 4 KB costs the same as reading a random 1 MB since most of the cost is moving the drive head into position. The 1 MB figure is also probably a bit of an understatement, and varies by disk. The point is that you’d prefer to read lots of contiguous data, and so would prefer to read a contiguous 2 MB off of one disk than read 1 MB off of two disks so that you incur only one seek rather than two. ↩︎

7. \(RS(X,Y)\) is notation for Reed-Solomon encoding with \(X\) data chunks and \(Y\) code chunks. ↩︎

8. Another classic example of a meta-stable failure. ↩︎