The paper I’m writing about today is x86-TSO: A Rigorous and Usable Programmer’s Model for x86 Multiprocessors by Sewell, Sarkar, Owens, Nardelli, and Myreen. The paper tries to provide a framework for systems programmers to reason about the execution of code on x86 multiprocessors. The main focus is being the execution of multi-threaded code on multi-processors, and how we can reason about its many possible executions.

Prior to learning about x86 processors, I had assumed (like most?) that non-synchronized code execution proceeded as an arbitrary interleaving of assembly instructions, where writes from one thread were immediately readable by all others. This still leaves lots of possible total execution orderings, but unfortunately this view is even too simplified.

Processors today have instruction and data caches to help feed the beast of the actual CPU, and this throws a wrench in our simplified world view. Since Spectre and Meltdown I imagine more people than ever are aware of these caches, but there are other pieces of the microarchitecture that can cause problems.

I learned about the store buffer from this paper, which does what it says on the tin. It buffers writes, or stores, to memory before they are ever seen by the memory subsystem, which means that things like the MESI protocol for cache coherency isn’t even happening at this level.

The store buffer, if you’re unaware of it, can cause very unexpected execution paths. The paper does a good job of enumerating many examples of how the store buffer can interfere.

Execution Madness

One example of store buffer induced weirdness is the example labeled SB in the paper.

SB

| Proc 0 | Proc 1 |

|---|---|

MOV [x] ← 1 |

MOV [y] ← 1 |

Final StateEAX == 0 |

Final StateEBX == 0 |

In this example, the first processor is writing the value 1 to the memory address [x] and then reading [y] into the register EAX. The second processor is writing 1 to the memory address [y] and then reading [x] into the register EBX.

The surprising allowed final state is that Proc 0 can have EAX == 0 and Proc 1 can have EBX == 0 as well! If you were like me, and thought that the result of execution would be some linearizable interleaving of assembly instructions, this is quite a shock!

If Proc 0 and Proc 1 both read 0, then clearly, Proc 0 couldn’t have executed its first instruction before Proc 1 executed its second instruction because then Proc 1 would have read the value 1 into EBX. But then that means Proc 1 must’ve executed its first instruction and written 1 into [y]. But HOLD THE GOD DAMN HORSES, if that had happened then Proc 0 should have read 1 into EAX so now everything is a lie. Great.

So what’s really going on here?

Store Buffering. There’s no guarantee by the microarchitecture that a MOV instruction will actually cause a write to the memory subsytem immediately before we move onto the next instruction. For those kind of guarantees there are other instructions you can use like MFENCE or SFENCE. But otherwise a x86 processor is free to buffer the write of an instruction and move right on to the next instruction.

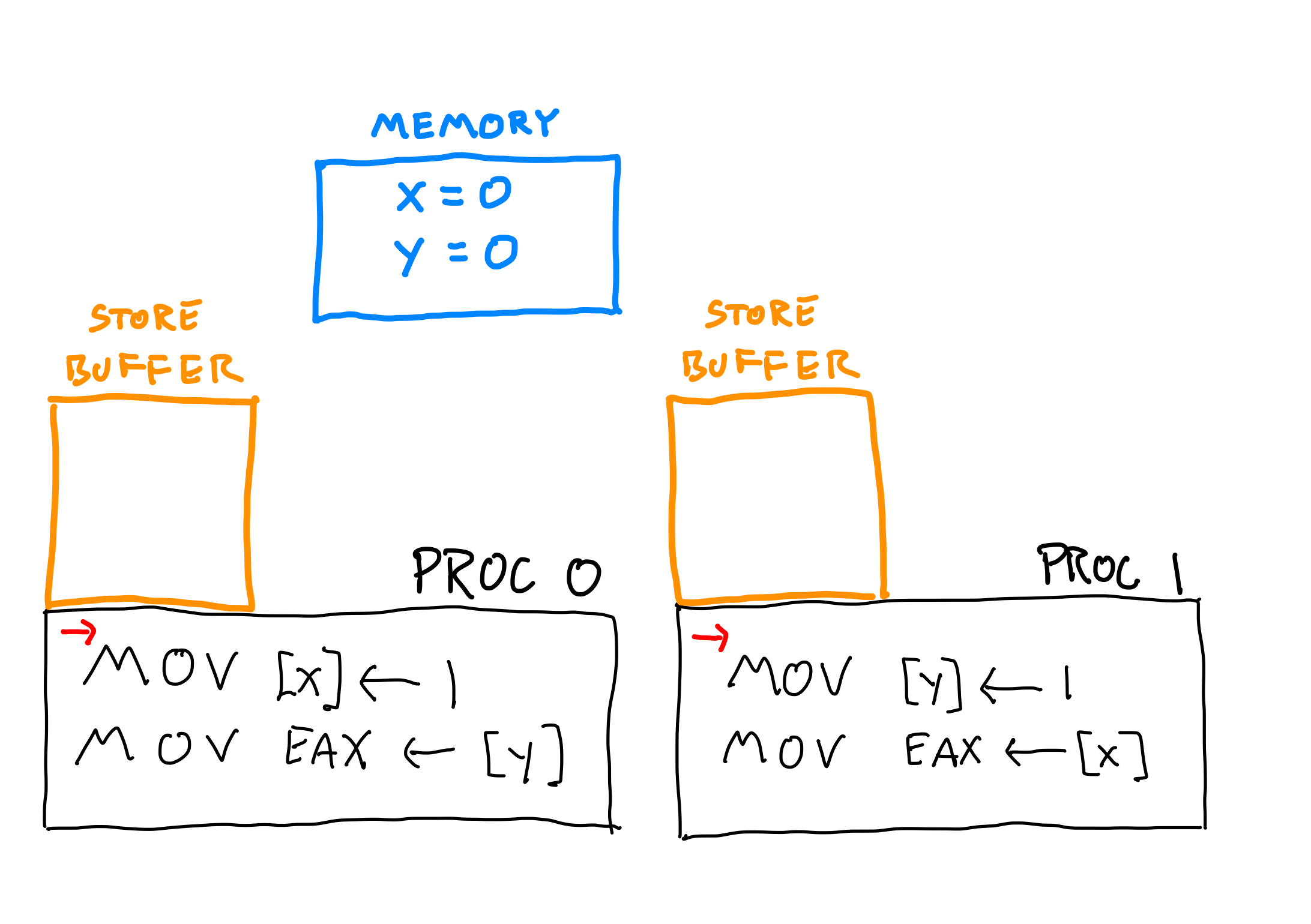

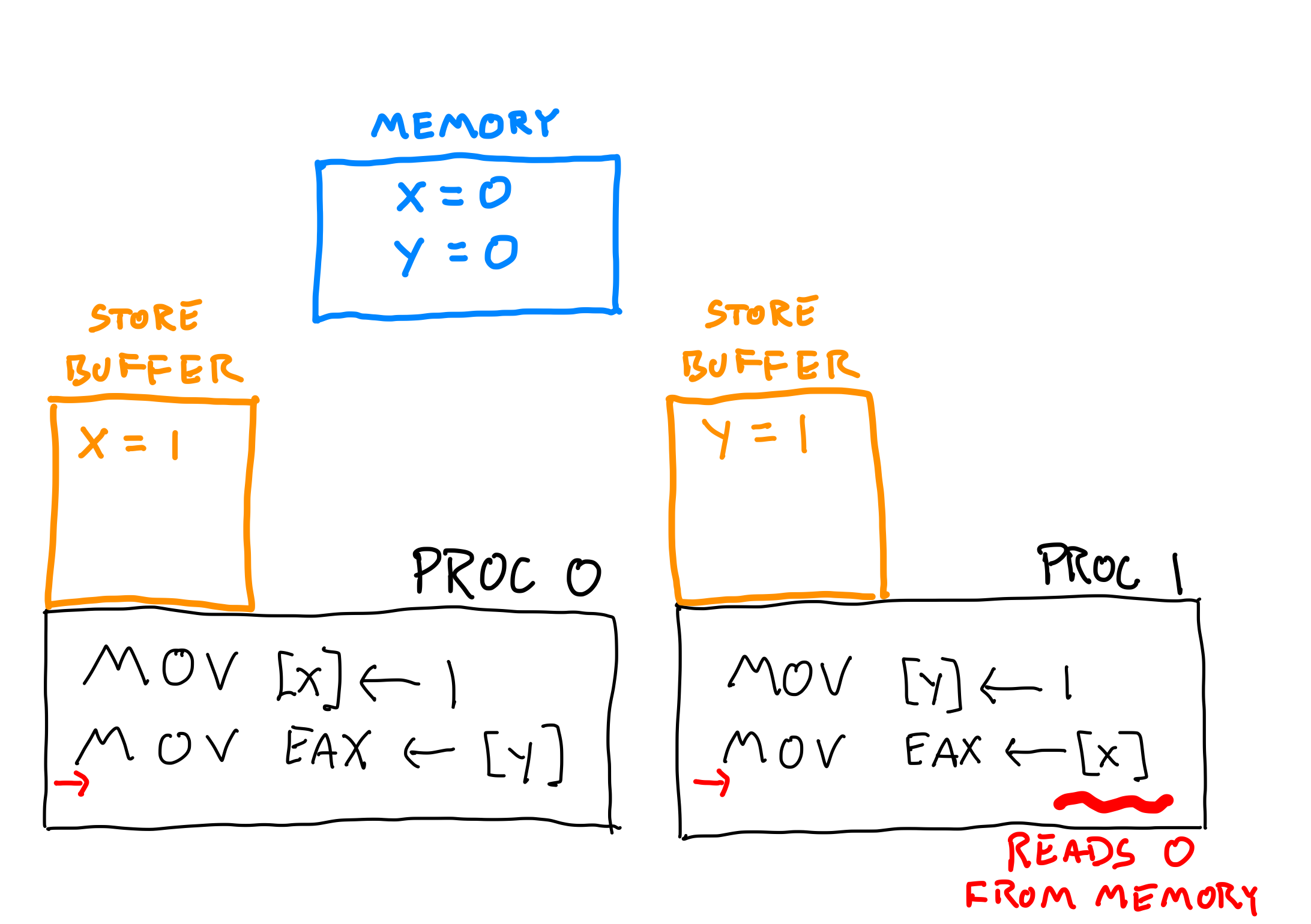

To illustrate how we can end up in the weird state where both processors end up reading 0, we turn to my poor drawing skills:

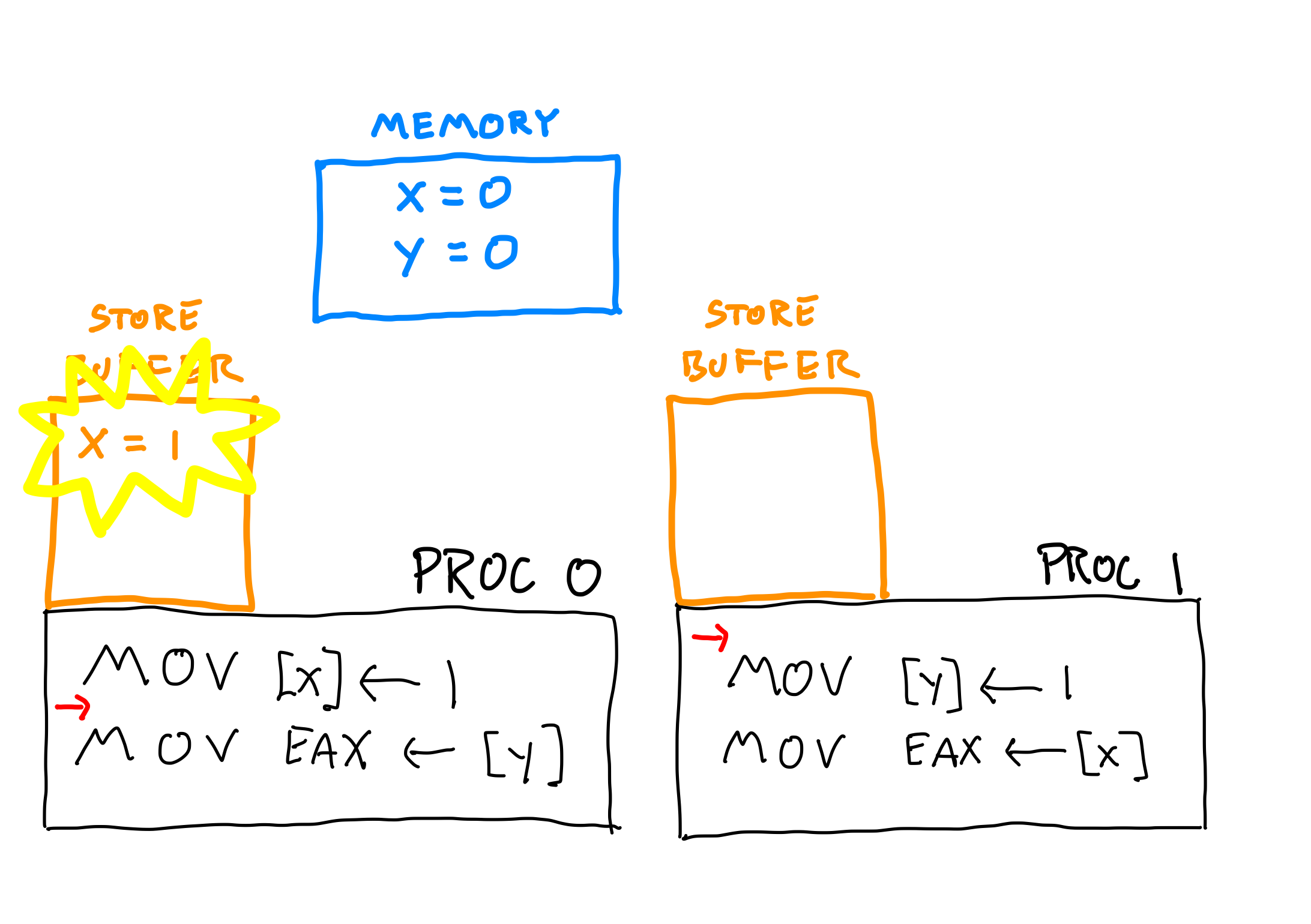

To start, [x] and [y] are both 0 in memory, the store buffers are empty, and neither processor has executed any instructions. Now, processor 0 writes to [x] but it gets buffered in the store buffer.

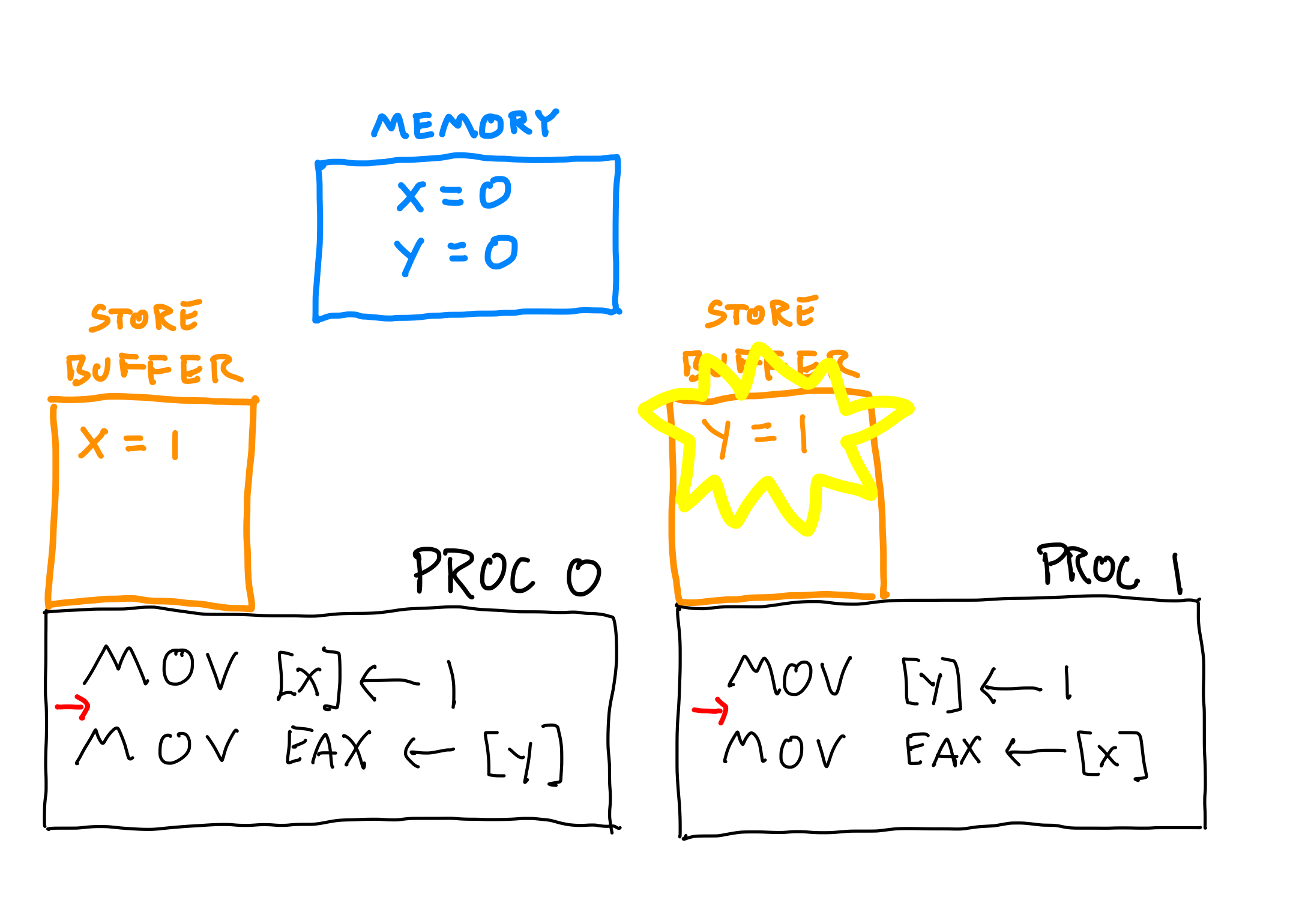

Next, processor 1 writes to [y] but its write also gets buffered in its store buffer.

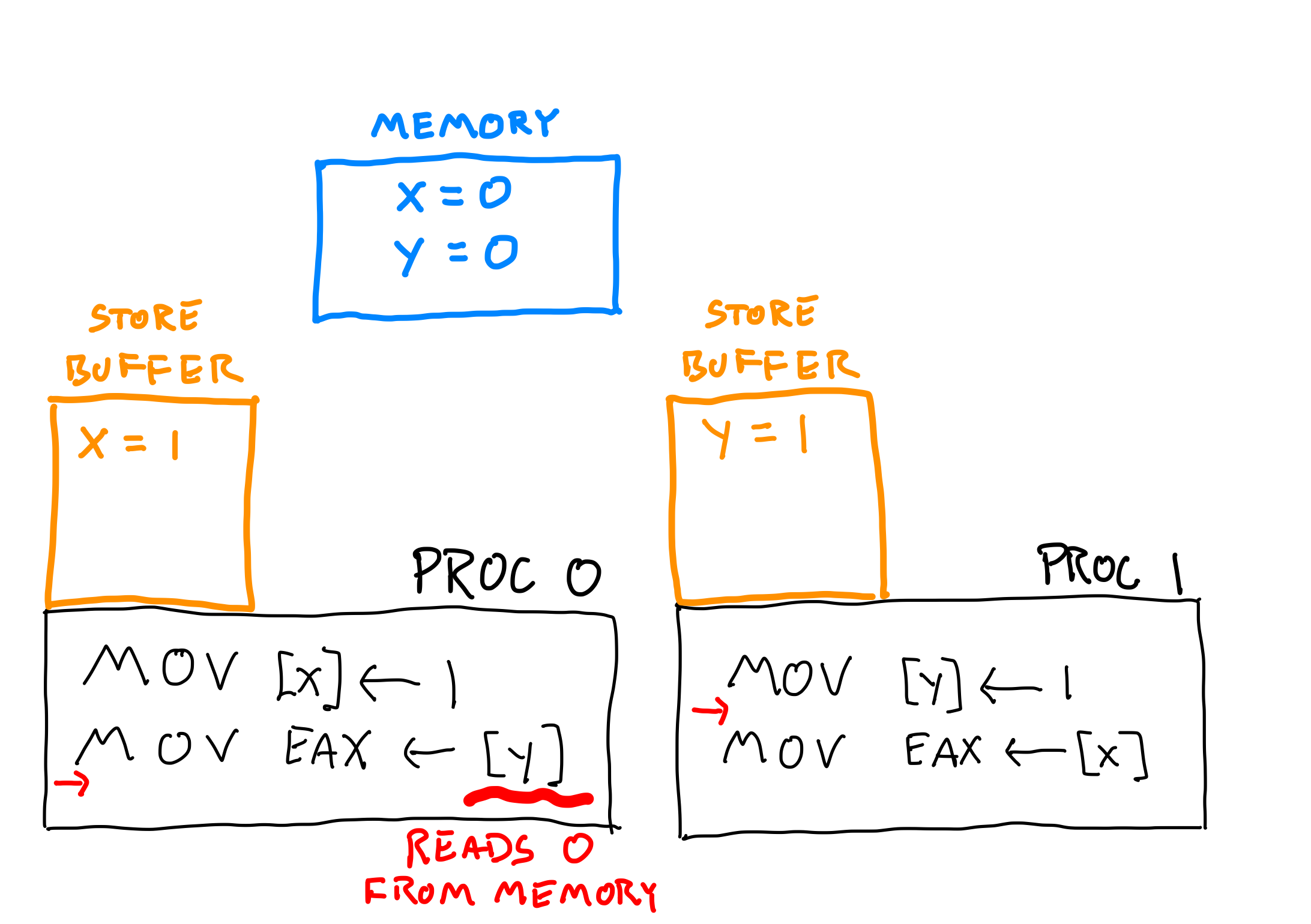

At this point neither write has propagated to main memory. So when processor 0 reads [y] from memory, it’s going to read 0.

And when processor 1 follows suit and reads [x] from memory, it also reads 0.

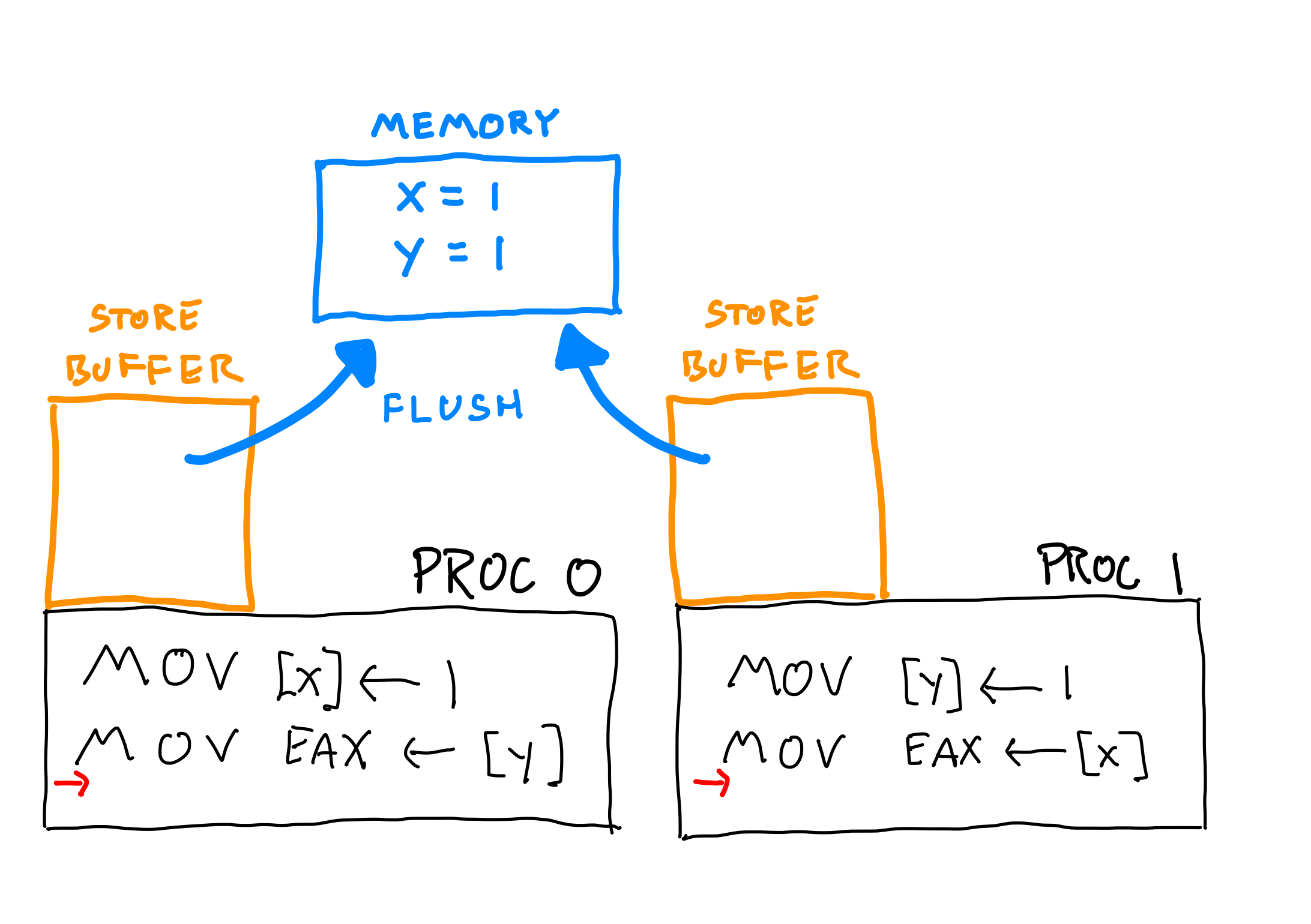

And finally at a later date, the store buffers are flushed to memory.

So this is one possible execution flow that shows how we can end up with both processors reading 0 in the SB example. What’s even more frustrating and confusing is that after the store buffers are flushed, both processors would read [x] and [y] as holding the value 1!

The Rules

Besides bringing up weird execution examples, the paper is also aiming to codify the rules for how an x86 processor behaves. It does so by going through a bunch more super unintuitive examples of weird execution, and how all of them are permitted in either Intel or AMD manuals. The problem is that only some of them actually do occur! But because these manuals are written in inaccurate human prose, it’s very tough to mechanize these descriptions into an actual abstract machine model.

The authors put together a list of rules, that are intuitive for a programmer to reason about, as well as codifying these rules into an abstract machine model called “x86-TSO” which is formalized in the HOL4 Interactive Theorem Prover. They’ve also taken the trouble to write and run tests on actual modern processors. Of course you can’t prove the absence of bugs with tests, but it sure beats “hope” as a strategy.

If you’re interested in the exact rules I’d recommend reading the full paper, and visit their site. But here’s the boiled down version they provide in the paper:

- The store buffers are FIFO and a reading thread must read its most recent buffered write, if there is one, to that address; otherwise reads are satisfied from shared memory.

- An

MFENCEinstruction flushes the store buffer of that thread. - To execute a

LOCK’d instruction, a thread must first obtain the global lock. At the end of the instruction, it flushes its store buffer and relinquishes the lock. While the lock is held by one thread, no other thread can read. - A buffered write from a thread can propagate to the shared memory at any time except when some other thread holds the lock.

If you think about it (a lot), you can see how the example SB execution flow we gave above follows these rules.

They also answer a fun question I had a while ago about whether you really needed LOCK’d instructions. My stupid idea was to not have any locked accesses of variables and instead force each variable onto its own cache line, thereby depending on the memory subsytem for coherency. Now we know that wouldn’t work because store buffers would cause separate threads to read different data, and the unsynchronized flush from the store buffers would of course randomly overwrite data.

Conclusion

Overall this paper was actually a fairly short, entertaining read, especially if you gloss over the part where the paper outlines how CPU manufacturers vigorously handwaved what the invariants of their systems are for a decade. I managed to learn about store buffers, that store buffers can be shared across cores, and gained some guidelines for thinking about x86 hardware level concurrency. Dope!

It was also fun to get paper recommendations and helpful tips from the internet on twitter, so thanks everyone!